Tpetra parallel linear algebra

Version of the Day

Sparse matrix whose entries are small dense square blocks, all of the same dimensions.

#include <Tpetra_Experimental_BlockCrsMatrix_decl.hpp>

Public Types | |

Public typedefs | |

| using | scalar_type = Scalar |

| The type of entries in the matrix (that is, of each entry in each block). | |

| using | impl_scalar_type = typename BMV::impl_scalar_type |

| The implementation type of entries in the matrix. | |

| typedef LO | local_ordinal_type |

| The type of local indices. | |

| typedef GO | global_ordinal_type |

| The type of global indices. | |

| typedef Node | node_type |

| The Node type. | |

| typedef Node::device_type | device_type |

| The Kokkos::Device specialization that this class uses. | |

| typedef device_type::execution_space | execution_space |

| The Kokkos execution space that this class uses. | |

| typedef device_type::memory_space | memory_space |

| The Kokkos memory space that this class uses. | |

| typedef ::Tpetra::Map< LO, GO, node_type > | map_type |

| The implementation of Map that this class uses. | |

| typedef ::Tpetra::MultiVector < Scalar, LO, GO, node_type > | mv_type |

| The implementation of MultiVector that this class uses. | |

| typedef ::Tpetra::CrsGraph< LO, GO, node_type > | crs_graph_type |

| The implementation of CrsGraph that this class uses. | |

| typedef Kokkos::View < impl_scalar_type **, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits < Kokkos::Unmanaged > > | little_block_type |

| The type used to access nonconst matrix blocks. | |

| typedef Kokkos::View< const impl_scalar_type **, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits < Kokkos::Unmanaged > > | const_little_block_type |

| The type used to access const matrix blocks. | |

| typedef Kokkos::View < impl_scalar_type *, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits < Kokkos::Unmanaged > > | little_vec_type |

| The type used to access nonconst vector blocks. | |

| typedef Kokkos::View< const impl_scalar_type *, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits < Kokkos::Unmanaged > > | const_little_vec_type |

| The type used to access const vector blocks. | |

Typedefs | |

| typedef MultiVector< Scalar, LO, GO, Node >::mag_type | mag_type |

| Type of a norm result. | |

Public Member Functions | |

| virtual Teuchos::RCP< const Teuchos::Comm< int > > | getComm () const |

| The communicator over which this matrix is distributed. | |

| virtual global_size_t | getGlobalNumCols () const |

| The global number of columns of this matrix. | |

| virtual size_t | getNodeNumCols () const |

| The number of columns needed to apply the forward operator on this node. | |

| virtual GO | getIndexBase () const |

| The index base for global indices in this matrix. | |

| virtual global_size_t | getGlobalNumEntries () const |

| The global number of stored (structurally nonzero) entries. | |

| virtual size_t | getNodeNumEntries () const |

| The local number of stored (structurally nonzero) entries. | |

| virtual size_t | getNumEntriesInGlobalRow (GO globalRow) const |

| The current number of entries on the calling process in the specified global row. | |

| virtual size_t | getGlobalMaxNumRowEntries () const |

| The maximum number of entries in any row over all processes in the matrix's communicator. | |

| virtual bool | hasColMap () const |

| Whether this matrix has a well-defined column Map. | |

| virtual bool | isLocallyIndexed () const |

| Whether matrix indices are locally indexed. | |

| virtual bool | isGloballyIndexed () const |

| Whether matrix indices are globally indexed. | |

| virtual bool | isFillComplete () const |

| Whether fillComplete() has been called. | |

| virtual bool | supportsRowViews () const |

| Whether this object implements getLocalRowView() and getGlobalRowView(). | |

Constructors and destructor | |

| BlockCrsMatrix () | |

| Default constructor: Makes an empty block matrix. | |

| BlockCrsMatrix (const crs_graph_type &graph, const LO blockSize) | |

| Constructor that takes a graph and a block size. | |

| BlockCrsMatrix (const crs_graph_type &graph, const map_type &domainPointMap, const map_type &rangePointMap, const LO blockSize) | |

| Constructor that takes a graph, domain and range point Maps, and a block size. | |

| virtual | ~BlockCrsMatrix () |

| Destructor (declared virtual for memory safety). | |

Implementation of Tpetra::Operator | |

| Teuchos::RCP< const map_type > | getDomainMap () const |

| Get the (point) domain Map of this matrix. | |

| Teuchos::RCP< const map_type > | getRangeMap () const |

| Get the (point) range Map of this matrix. | |

| Teuchos::RCP< const map_type > | getRowMap () const |

| Get the (mesh) Map for the rows of this block matrix. | |

| Teuchos::RCP< const map_type > | getColMap () const |

| Get the (mesh) Map for the columns of this block matrix. | |

| global_size_t | getGlobalNumRows () const |

| Get the global number of block rows. | |

| size_t | getNodeNumRows () const |

| Get the local number of block rows. | |

| size_t | getNodeMaxNumRowEntries () const |

| Maximum number of entries in any row of the matrix, on this process. | |

| void | apply (const mv_type &X, mv_type &Y, Teuchos::ETransp mode=Teuchos::NO_TRANS, Scalar alpha=Teuchos::ScalarTraits< Scalar >::one(), Scalar beta=Teuchos::ScalarTraits< Scalar >::zero()) const |

| For this matrix A, compute Y := beta * Y + alpha * Op(A) * X. | |

| bool | hasTransposeApply () const |

| Whether it is valid to apply the transpose or conjugate transpose of this matrix. | |

| void | setAllToScalar (const Scalar &alpha) |

| Set all matrix entries equal to alpha. | |

Implementation of Teuchos::Describable | |

| std::string | description () const |

| One-line description of this object. | |

| void | describe (Teuchos::FancyOStream &out, const Teuchos::EVerbosityLevel verbLevel) const |

| Print a description of this object to the given output stream. | |

Implementation of "dual view semantics" | |

| void | modify_host () |

| Mark the matrix's values as modified in host space. | |

| void | modify_device () |

| Mark the matrix's values as modified in device space. | |

| template<class MemorySpace > | |

| void | modify () |

| Mark the matrix's values as modified in the given memory space. | |

| bool | need_sync_host () const |

| Whether the matrix's values need sync'ing to host space. | |

| bool | need_sync_device () const |

| Whether the matrix's values need sync'ing to device space. | |

| template<class MemorySpace > | |

| bool | need_sync () const |

| Whether the matrix's values need sync'ing to the given memory space. | |

| void | sync_host () |

| Sync the matrix's values to host space. | |

| void | sync_device () |

| Sync the matrix's values to device space. | |

| template<class MemorySpace > | |

| void | sync () |

| Sync the matrix's values to the given memory space. | |

| Kokkos::DualView < impl_scalar_type *, device_type >::t_host | getValuesHost () const |

| Kokkos::DualView < impl_scalar_type *, device_type >::t_dev | getValuesDevice () const |

| template<class MemorySpace > | |

| std::conditional< is_cuda < MemorySpace >::value, typename Kokkos::DualView < impl_scalar_type *, device_type >::t_dev, typename Kokkos::DualView < impl_scalar_type *, device_type >::t_host > ::type | getValues () |

| Get the host or device View of the matrix's values (val_). | |

Extraction Methods | |

| virtual void | getGlobalRowCopy (GO GlobalRow, const Teuchos::ArrayView< GO > &Indices, const Teuchos::ArrayView< Scalar > &Values, size_t &NumEntries) const |

| Get a copy of the given global row's entries. | |

| virtual void | getGlobalRowView (GO GlobalRow, Teuchos::ArrayView< const GO > &indices, Teuchos::ArrayView< const Scalar > &values) const |

| Get a constant, nonpersisting, globally indexed view of the given row of the matrix. | |

| virtual void | getLocalDiagCopy (::Tpetra::Vector< Scalar, LO, GO, Node > &diag) const |

| Get a copy of the diagonal entries, distributed by the row Map. | |

Mathematical methods | |

| virtual void | leftScale (const ::Tpetra::Vector< Scalar, LO, GO, Node > &x) |

| Scale the RowMatrix on the left with the given Vector x. | |

| virtual void | rightScale (const ::Tpetra::Vector< Scalar, LO, GO, Node > &x) |

| Scale the RowMatrix on the right with the given Vector x. | |

| virtual typename ::Tpetra::RowMatrix< Scalar, LO, GO, Node >::mag_type | getFrobeniusNorm () const |

| The Frobenius norm of the matrix. | |

Extraction Methods | |

| virtual LO | getLocalRowViewRaw (const LO lclRow, LO &numEnt, const LO *&lclColInds, const Scalar *&vals) const |

| Get a constant, nonpersisting, locally indexed view of the given row of the matrix, using "raw" pointers instead of Teuchos::ArrayView. | |

| virtual void | getLocalDiagCopy (Vector< Scalar, LO, GO, Node > &diag) const =0 |

| Get a copy of the diagonal entries, distributed by the row Map. | |

Mathematical methods | |

| virtual void | leftScale (const Vector< Scalar, LO, GO, Node > &x)=0 |

| Scale the matrix on the left with the given Vector. | |

| virtual void | rightScale (const Vector< Scalar, LO, GO, Node > &x)=0 |

| Scale the matrix on the right with the given Vector. | |

| virtual Teuchos::RCP < RowMatrix< Scalar, LO, GO, Node > > | add (const Scalar &alpha, const RowMatrix< Scalar, LO, GO, Node > &A, const Scalar &beta, const Teuchos::RCP< const Map< LO, GO, Node > > &domainMap=Teuchos::null, const Teuchos::RCP< const Map< LO, GO, Node > > &rangeMap=Teuchos::null, const Teuchos::RCP< Teuchos::ParameterList > &params=Teuchos::null) const |

| Return a new RowMatrix which is the result of beta*this + alpha*A. | |

Implementation of Packable interface | |

| virtual void | pack (const Teuchos::ArrayView< const LO > &exportLIDs, Teuchos::Array< char > &exports, const Teuchos::ArrayView< size_t > &numPacketsPerLID, size_t &constantNumPackets, Distributor &distor) const |

| Pack this object's data for an Import or Export. | |

Pure virtual functions to be overridden by subclasses. | |

| virtual void | apply (const MultiVector< Scalar, LO, GO, Node > &X, MultiVector< Scalar, LO, GO, Node > &Y, Teuchos::ETransp mode=Teuchos::NO_TRANS, Scalar alpha=Teuchos::ScalarTraits< Scalar >::one(), Scalar beta=Teuchos::ScalarTraits< Scalar >::zero()) const =0 |

| Computes the operator-multivector application. | |

Public methods for redistributing data | |

| void | doImport (const SrcDistObject &source, const Import< LO, GO, Node > &importer, const CombineMode CM, const bool restrictedMode=false) |

| Import data into this object using an Import object ("forward mode"). | |

| void | doImport (const SrcDistObject &source, const Export< LO, GO, Node > &exporter, const CombineMode CM, const bool restrictedMode=false) |

| Import data into this object using an Export object ("reverse mode"). | |

| void | doExport (const SrcDistObject &source, const Export< LO, GO, Node > &exporter, const CombineMode CM, const bool restrictedMode=false) |

| Export data into this object using an Export object ("forward mode"). | |

| void | doExport (const SrcDistObject &source, const Import< LO, GO, Node > &importer, const CombineMode CM, const bool restrictedMode=false) |

| Export data into this object using an Import object ("reverse mode"). | |

Attribute accessor methods | |

| bool | isDistributed () const |

| Whether this is a globally distributed object. | |

| virtual Teuchos::RCP< const map_type > | getMap () const |

| The Map describing the parallel distribution of this object. | |

I/O methods | |

| void | print (std::ostream &os) const |

| Print this object to the given output stream. | |

Methods for use only by experts | |

| virtual void | removeEmptyProcessesInPlace (const Teuchos::RCP< const map_type > &newMap) |

| Remove processes which contain no entries in this object's Map. | |

Protected Types | |

| typedef char | packet_type |

| Implementation detail; tells. | |

Protected Member Functions | |

| virtual size_t | constantNumberOfPackets () const |

| Whether the implementation's instance promises always to have a constant number of packets per LID (local index), and if so, how many packets per LID there are. | |

| virtual void | doTransfer (const SrcDistObject &src, const ::Tpetra::Details::Transfer< local_ordinal_type, global_ordinal_type, node_type > &transfer, const char modeString[], const ReverseOption revOp, const CombineMode CM, const bool restrictedMode) |

| Redistribute data across (MPI) processes. | |

| virtual bool | reallocArraysForNumPacketsPerLid (const size_t numExportLIDs, const size_t numImportLIDs) |

| Reallocate numExportPacketsPerLID_ and/or numImportPacketsPerLID_, if necessary. | |

| virtual void | doTransferNew (const SrcDistObject &src, const CombineMode CM, const size_t numSameIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteToLIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteFromLIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &remoteLIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &exportLIDs, Distributor &distor, const ReverseOption revOp, const bool commOnHost, const bool restrictedMode) |

| Implementation detail of doTransfer. | |

Methods implemented by subclasses and used by doTransfer(). | |

The doTransfer() method uses the subclass' implementations of these methods to implement data transfer. Subclasses of DistObject must implement these methods. This is an instance of the Template Method Pattern. ("Template" here doesn't mean "C++ template"; it means "pattern with holes that are filled in by the subclass' method implementations.") | |

| virtual bool | checkSizes (const SrcDistObject &source)=0 |

| Compare the source and target (this) objects for compatibility. | |

| virtual void | copyAndPermute (const SrcDistObject &source, const size_t numSameIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteToLIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteFromLIDs) |

| Perform copies and permutations that are local to the calling (MPI) process. | |

| virtual void | packAndPrepare (const SrcDistObject &source, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &exportLIDs, Kokkos::DualView< packet_type *, buffer_device_type > &exports, Kokkos::DualView< size_t *, buffer_device_type > numPacketsPerLID, size_t &constantNumPackets, Distributor &distor) |

| Pack data and metadata for communication (sends). | |

| virtual void | unpackAndCombine (const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &importLIDs, Kokkos::DualView< packet_type *, buffer_device_type > imports, Kokkos::DualView< size_t *, buffer_device_type > numPacketsPerLID, const size_t constantNumPackets, Distributor &distor, const CombineMode combineMode) |

| Perform any unpacking and combining after communication. | |

| bool | reallocImportsIfNeeded (const size_t newSize, const bool verbose, const std::string *prefix) |

| Reallocate imports_ if needed. | |

| Teuchos::RCP< const map_type > | map_ |

| The Map over which this object is distributed. | |

| Kokkos::DualView< packet_type *, buffer_device_type > | imports_ |

| Buffer into which packed data are imported (received from other processes). | |

| Kokkos::DualView< size_t *, buffer_device_type > | numImportPacketsPerLID_ |

| Number of packets to receive for each receive operation. | |

| Kokkos::DualView< packet_type *, buffer_device_type > | exports_ |

| Buffer from which packed data are exported (sent to other processes). | |

| Kokkos::DualView< size_t *, buffer_device_type > | numExportPacketsPerLID_ |

| Number of packets to send for each send operation. | |

Block operations | |

| LO | getBlockSize () const |

| The number of degrees of freedom per mesh point. | |

| virtual Teuchos::RCP< const ::Tpetra::RowGraph< LO, GO, Node > > | getGraph () const |

| Get the (mesh) graph. | |

| const crs_graph_type & | getCrsGraph () const |

| void | applyBlock (const BlockMultiVector< Scalar, LO, GO, Node > &X, BlockMultiVector< Scalar, LO, GO, Node > &Y, Teuchos::ETransp mode=Teuchos::NO_TRANS, const Scalar alpha=Teuchos::ScalarTraits< Scalar >::one(), const Scalar beta=Teuchos::ScalarTraits< Scalar >::zero()) |

| Version of apply() that takes BlockMultiVector input and output. | |

| void | gaussSeidelCopy (MultiVector< Scalar, LO, GO, Node > &X, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > &B, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > &D, const Scalar &dampingFactor, const ESweepDirection direction, const int numSweeps, const bool zeroInitialGuess) const |

| Version of gaussSeidel(), with fewer requirements on X. | |

| void | reorderedGaussSeidelCopy (::Tpetra::MultiVector< Scalar, LO, GO, Node > &X, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > &B, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > &D, const Teuchos::ArrayView< LO > &rowIndices, const Scalar &dampingFactor, const ESweepDirection direction, const int numSweeps, const bool zeroInitialGuess) const |

| Version of reorderedGaussSeidel(), with fewer requirements on X. | |

| void | localGaussSeidel (const BlockMultiVector< Scalar, LO, GO, Node > &Residual, BlockMultiVector< Scalar, LO, GO, Node > &Solution, const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > &D_inv, const Scalar &omega, const ESweepDirection direction) const |

| Local Gauss-Seidel solve, given a factorized diagonal. | |

| LO | replaceLocalValues (const LO localRowInd, const LO colInds[], const Scalar vals[], const LO numColInds) const |

| Replace values at the given (mesh, i.e., block) column indices, in the given (mesh, i.e., block) row. | |

| LO | sumIntoLocalValues (const LO localRowInd, const LO colInds[], const Scalar vals[], const LO numColInds) const |

| Sum into values at the given (mesh, i.e., block) column indices, in the given (mesh, i.e., block) row. | |

| LO | getLocalRowView (const LO localRowInd, const LO *&colInds, Scalar *&vals, LO &numInds) const |

| Get a view of the (mesh, i.e., block) row, using local (mesh, i.e., block) indices. | |

| void | getLocalRowView (LO LocalRow, Teuchos::ArrayView< const LO > &indices, Teuchos::ArrayView< const Scalar > &values) const |

| Not implemented. | |

| void | getLocalRowCopy (LO LocalRow, const Teuchos::ArrayView< LO > &Indices, const Teuchos::ArrayView< Scalar > &Values, size_t &NumEntries) const |

| Not implemented. | |

| little_block_type | getLocalBlock (const LO localRowInd, const LO localColInd) const |

| LO | getLocalRowOffsets (const LO localRowInd, ptrdiff_t offsets[], const LO colInds[], const LO numColInds) const |

| Get relative offsets corresponding to the given (mesh) column indices, in the given (mesh) row, given by local row index. | |

| LO | replaceLocalValuesByOffsets (const LO localRowInd, const ptrdiff_t offsets[], const Scalar vals[], const LO numOffsets) const |

| Like replaceLocalValues, but avoids computing row offsets. | |

| LO | sumIntoLocalValuesByOffsets (const LO localRowInd, const ptrdiff_t offsets[], const Scalar vals[], const LO numOffsets) const |

| Like sumIntoLocalValues, but avoids computing row offsets. | |

| size_t | getNumEntriesInLocalRow (const LO localRowInd) const |

| Return the number of entries in the given row on the calling process. | |

| bool | localError () const |

| Whether this object had an error on the calling process. | |

| std::string | errorMessages () const |

| The current stream of error messages. | |

| void | getLocalDiagOffsets (const Kokkos::View< size_t *, device_type, Kokkos::MemoryUnmanaged > &offsets) const |

| Get offsets of the diagonal entries in the matrix. | |

| void | getLocalDiagCopy (const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > &diag, const Kokkos::View< const size_t *, device_type, Kokkos::MemoryUnmanaged > &offsets) const |

| Variant of getLocalDiagCopy() that uses precomputed offsets and puts diagonal blocks in a 3-D Kokkos::View. | |

| void | getLocalDiagCopy (const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > &diag, const Teuchos::ArrayView< const size_t > &offsets) const |

| Variant of getLocalDiagCopy() that uses precomputed offsets and puts diagonal blocks in a 3-D Kokkos::View. | |

| LO | absMaxLocalValues (const LO localRowInd, const LO colInds[], const Scalar vals[], const LO numColInds) const |

| Like sumIntoLocalValues, but for the ABSMAX combine mode. | |

| LO | absMaxLocalValuesByOffsets (const LO localRowInd, const ptrdiff_t offsets[], const Scalar vals[], const LO numOffsets) const |

| Like sumIntoLocalValuesByOffsets, but for the ABSMAX combine mode. | |

Implementation of Tpetra::DistObject. | |

The methods here implement Tpetra::DistObject. They let BlockCrsMatrix participate in Import and Export operations. Users don't have to worry about these methods. | |

| using | buffer_device_type = typename DistObject< Scalar, LO, GO, Node >::buffer_device_type |

| Kokkos::Device specialization for communication buffers. | |

| virtual bool | checkSizes (const ::Tpetra::SrcDistObject &source) |

| virtual void | copyAndPermute (const SrcDistObject &sourceObj, const size_t numSameIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteToLIDs, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &permuteFromLIDs) |

| virtual void | packAndPrepare (const SrcDistObject &sourceObj, const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &exportLIDs, Kokkos::DualView< packet_type *, buffer_device_type > &exports, Kokkos::DualView< size_t *, buffer_device_type > numPacketsPerLID, size_t &constantNumPackets, Distributor &) |

| virtual void | unpackAndCombine (const Kokkos::DualView< const local_ordinal_type *, buffer_device_type > &importLIDs, Kokkos::DualView< packet_type *, buffer_device_type > imports, Kokkos::DualView< size_t *, buffer_device_type > numPacketsPerLID, const size_t constantNumPackets, Distributor &, const CombineMode combineMode) |

Sparse matrix whose entries are small dense square blocks, all of the same dimensions.

| Scalar | The type of the numerical entries of the matrix. (You can use real-valued or complex-valued types here, unlike in Epetra, where the scalar type is always double.) |

| LO | The type of local indices. See the documentation of the first template parameter of Map for requirements. |

| GO | The type of global indices. See the documentation of the second template parameter of Map for requirements. |

| Node | The Kokkos Node type. See the documentation of the third template parameter of Map for requirements. |

Please read the documentation of BlockMultiVector first.

This class implements a sparse matrix whose entries are small dense square blocks, all of the same dimensions. The intended application is to store the discretization of a partial differential equation with multiple degrees of freedom per mesh point, where all mesh points have the same number of degrees of freedom. Each block holds the values that couple the degrees of freedom of one (row) mesh point to those of one (column) mesh point; this class stores each block contiguously, as a getBlockSize() by getBlockSize() array in row-major format. The matrix's graph represents the mesh: it has one row per mesh point and one entry per block. This saves storage over using a CrsMatrix, which requires one graph entry per matrix entry.
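To make the storage scheme concrete, here is a minimal sketch of a generic block-CSR layout. The struct `BsrModel` and the helpers `valueIndex`, `bsrGraphEntries`, and `pointCrsGraphEntries` are hypothetical illustrations, not Tpetra's internal data structures; they only show row-major indexing within a block and how many graph entries the block format saves relative to a point CRS graph with the same sparsity pattern.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical block-CSR (BSR) model, not Tpetra's internal storage:
// one graph entry (column index) per block, and each block stored
// contiguously as a blockSize x blockSize row-major array.
struct BsrModel {
  int blockSize;
  std::vector<std::size_t> rowPtr;  // numMeshRows + 1 offsets into colInd
  std::vector<int> colInd;          // local mesh column index of each block
  std::vector<double> values;       // colInd.size() * blockSize * blockSize scalars
};

// Position in `values` of entry (i, j) of the block stored at graph offset k.
// Row-major: entries of one block row are adjacent, so j varies fastest.
std::size_t valueIndex(const BsrModel& A, std::size_t k, int i, int j) {
  assert(i >= 0 && i < A.blockSize && j >= 0 && j < A.blockSize);
  const std::size_t b = static_cast<std::size_t>(A.blockSize);
  return k * b * b + static_cast<std::size_t>(i) * b + static_cast<std::size_t>(j);
}

// Graph entries needed for the same sparsity pattern:
// one per block for BSR versus one per scalar entry for a point CRS graph.
std::size_t bsrGraphEntries(const BsrModel& A) { return A.colInd.size(); }

std::size_t pointCrsGraphEntries(const BsrModel& A) {
  const std::size_t b = static_cast<std::size_t>(A.blockSize);
  return A.colInd.size() * b * b;
}
```

With a block size of 3, each block costs one graph entry in this model instead of nine.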

This class requires a fill-complete Tpetra::CrsGraph for construction. Thus, it has a row Map and a column Map already. As a result, BlockCrsMatrix only needs to provide access using local indices. Access using local indices is faster anyway, since conversion from global to local indices requires a hash table lookup per index. Users are responsible for converting from global to local indices if necessary. Please be aware that the row Map and column Map may differ, so you may not use local row and column indices interchangeably.
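The sketch below models, under simplifying assumptions, the global-to-local conversion that users are expected to do themselves: one hash-table lookup per index, done once before calling the local-index methods. `GidToLid` and `toLocal` are hypothetical names; in Tpetra this lookup belongs to the Map (for example, `Map::getLocalElement`). Building separate tables for rows and columns mirrors the warning that local row and column indices are not interchangeable.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <vector>

// Hypothetical model of a Map's global-to-local lookup on one process:
// local index (LID) k refers to global index (GID) myGids[k].
class GidToLid {
 public:
  explicit GidToLid(const std::vector<std::int64_t>& myGids) {
    for (std::size_t k = 0; k < myGids.size(); ++k) {
      const bool inserted = table_.emplace(myGids[k], static_cast<int>(k)).second;
      assert(inserted && "GIDs owned by one process must be unique");
      (void)inserted;
    }
  }

  // One hash-table lookup per call; returns -1 if gid is not in this Map here.
  int getLocalElement(std::int64_t gid) const {
    const auto it = table_.find(gid);
    return it == table_.end() ? -1 : it->second;
  }

 private:
  std::unordered_map<std::int64_t, int> table_;
};

// Convert a list of global column indices to local ones once, up front.
// Returns false if some GID is not in the column Map on this process.
bool toLocal(const GidToLid& colMap, const std::vector<std::int64_t>& gids,
             std::vector<int>& lids) {
  lids.resize(gids.size());
  for (std::size_t k = 0; k < gids.size(); ++k) {
    lids[k] = colMap.getLocalElement(gids[k]);
    if (lids[k] == -1) return false;
  }
  return true;
}
```

If the row table is built from GIDs {10, 20, 30} and the column table from {30, 10, 20, 40}, global index 30 has local row index 2 but local column index 0, which is exactly why the two kinds of local index must not be mixed.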

We reserve the right to change the block layout in the future. Best practice is to use this class' little_block_type and const_little_block_type typedefs to access blocks, since their types offer access to entries in a layout-independent way. These two typedefs are both Kokkos::View specializations.
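As an illustration of why code written against an (i, j) accessor does not depend on the block layout, the sketch below multiplies a block by a short vector using only B(i, j). `RowMajorBlock` and `ColMajorBlock` are hypothetical stand-ins for views with different layouts; the real little_block_type is a Kokkos::View, which is likewise indexed as B(i, j).

```cpp
#include <cassert>

// Two hypothetical ways to view the same b x b block stored in a flat array.
// Tpetra's little_block_type is a Kokkos::View with LayoutRight (row-major);
// these structs only imitate the idea of indexing through (i, j).
struct RowMajorBlock {
  double* data;
  int b;
  double& operator()(int i, int j) const { return data[i * b + j]; }
};

struct ColMajorBlock {
  double* data;
  int b;
  double& operator()(int i, int j) const { return data[j * b + i]; }
};

// y := B * x, written only in terms of B(i, j), so it stays correct
// whichever layout the block uses.
template <class Block>
void blockMatVec(const Block& B, const double* x, double* y) {
  assert(B.b > 0);
  for (int i = 0; i < B.b; ++i) {
    double sum = 0.0;
    for (int j = 0; j < B.b; ++j) sum += B(i, j) * x[j];
    y[i] = sum;
  }
}
```

The same `blockMatVec` gives identical results for the matrix [[1, 2], [3, 4]] whether it is stored as {1, 2, 3, 4} (row-major) or {1, 3, 2, 4} (column-major).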

Here is an example of how to fill into this object using raw-pointer views.
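The following is not Tpetra code but a self-contained model of the raw-pointer fill pattern, under stated assumptions: `BlockRows` stands in for the matrix's local storage, and `getLocalRowViewModel` is a hypothetical analogue of the raw-pointer `getLocalRowView (localRowInd, colInds, vals, numInds)` overload listed in the member summary, returning 0 on success. For each local mesh row it obtains pointers to the row's column indices and first block, then writes every block in row-major order.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Standalone model of block-CSR storage (not Tpetra): the blocks of mesh row r
// are entries rowPtr[r] .. rowPtr[r+1]-1, each stored as a b x b row-major array.
struct BlockRows {
  int blockSize;
  std::vector<int> rowPtr;
  std::vector<int> colInd;
  std::vector<double> values;
};

// Hypothetical analogue of the raw-pointer getLocalRowView: points colInds at
// the row's local mesh column indices and vals at the row's first block.
// Returns 0 on success and -1 if the row index is out of range.
int getLocalRowViewModel(BlockRows& A, int lclRow, const int*& colInds,
                         double*& vals, int& numInds) {
  const int numRows = static_cast<int>(A.rowPtr.size()) - 1;
  if (lclRow < 0 || lclRow >= numRows) return -1;
  const int start = A.rowPtr[lclRow];
  const std::size_t b2 = static_cast<std::size_t>(A.blockSize) * A.blockSize;
  colInds = A.colInd.data() + start;
  vals = A.values.data() + static_cast<std::size_t>(start) * b2;
  numInds = A.rowPtr[lclRow + 1] - start;
  return 0;
}

// Fill: diagonal blocks get 2*I, off-diagonal blocks get -1 in every entry.
// ASSUMPTION: local row r and local column r name the same mesh point here.
// That holds only if the row and column Maps agree on those indices; real code
// should compare global indices instead.
void fillExample(BlockRows& A) {
  const int b = A.blockSize;
  const int numRows = static_cast<int>(A.rowPtr.size()) - 1;
  for (int row = 0; row < numRows; ++row) {
    const int* colInds = nullptr;
    double* vals = nullptr;
    int numInds = 0;
    const int err = getLocalRowViewModel(A, row, colInds, vals, numInds);
    assert(err == 0);
    (void)err;
    for (int k = 0; k < numInds; ++k) {
      double* block = vals + static_cast<std::size_t>(k) * b * b;  // k-th block of the row
      for (int i = 0; i < b; ++i) {
        for (int j = 0; j < b; ++j) {
          block[i * b + j] = (colInds[k] == row) ? (i == j ? 2.0 : 0.0) : -1.0;
        }
      }
    }
  }
}
```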

Definition at line 137 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef char Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::packet_type | protected |

Implementation detail; tells.

Definition at line 148 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| using Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::scalar_type = Scalar |

The type of entries in the matrix (that is, of each entry in each block).

Definition at line 155 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| using Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::impl_scalar_type = typename BMV::impl_scalar_type |

The implementation type of entries in the matrix.

Letting scalar_type and impl_scalar_type differ helps this class work correctly for Scalar types like std::complex<T>, which lack the necessary CUDA device macros and volatile overloads to work correctly with Kokkos.

Definition at line 163 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::local_ordinal_type |

The type of local indices.

Definition at line 166 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef GO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::global_ordinal_type |

The type of global indices.

Definition at line 168 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Node Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::node_type |

The Node type.

Prefer device_type, execution_space, and memory_space (see below), which relate directly to Kokkos.

Definition at line 173 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Node::device_type Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::device_type |

The Kokkos::Device specialization that this class uses.

Definition at line 176 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef device_type::execution_space Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::execution_space |

The Kokkos execution space that this class uses.

Definition at line 178 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef device_type::memory_space Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::memory_space |

The Kokkos memory space that this class uses.

Definition at line 180 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef ::Tpetra::Map<LO, GO, node_type> Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::map_type |

The implementation of Map that this class uses.

Definition at line 183 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef ::Tpetra::MultiVector<Scalar, LO, GO, node_type> Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::mv_type |

The implementation of MultiVector that this class uses.

Definition at line 185 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef ::Tpetra::CrsGraph<LO, GO, node_type> Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::crs_graph_type |

The implementation of CrsGraph that this class uses.

Definition at line 187 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Kokkos::View<impl_scalar_type**, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits<Kokkos::Unmanaged> > Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::little_block_type |

The type used to access nonconst matrix blocks.

Definition at line 194 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Kokkos::View<const impl_scalar_type**, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits<Kokkos::Unmanaged> > Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::const_little_block_type |

The type used to access const matrix blocks.

Definition at line 200 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Kokkos::View<impl_scalar_type*, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits<Kokkos::Unmanaged> > Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::little_vec_type |

The type used to access nonconst vector blocks.

Definition at line 206 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

| typedef Kokkos::View<const impl_scalar_type*, Kokkos::LayoutRight, device_type, Kokkos::MemoryTraits<Kokkos::Unmanaged> > Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::const_little_vec_type |

The type used to access const vector blocks.

Definition at line 212 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

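| using Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::buffer_device_type = typename DistObject< Scalar, LO, GO, Node >::buffer_device_type | protected |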

Kokkos::Device specialization for communication buffers.

See #1088 for why this is not just device_type::device_type.

Definition at line 747 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

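| typedef MultiVector< Scalar, LO, GO, Node >::mag_type Tpetra::RowMatrix< Scalar, LO, GO, Node >::mag_type | inherited |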

Type of a norm result.

This is usually the same as the type of the magnitude (absolute value) of Scalar, but may differ for certain Scalar types.
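For example, the magnitude of a std::complex<double> is a double. A minimal sketch of such a trait follows, with the hypothetical names `MagType` and `magnitude`; Tpetra derives mag_type from its own scalar traits, not from this code.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <type_traits>

// Hypothetical trait illustrating a "type of a norm result": for a real scalar
// it is the scalar type itself; for std::complex<T> it is the real type T.
template <class Scalar>
struct MagType {
  using type = Scalar;
};

template <class T>
struct MagType<std::complex<T>> {
  using type = T;
};

// Magnitude (absolute value), returned in the magnitude type.
template <class Scalar>
typename MagType<Scalar>::type magnitude(const Scalar& x) {
  using std::abs;  // std::abs has overloads for real types and for std::complex<T>
  return abs(x);
}

static_assert(std::is_same<MagType<double>::type, double>::value,
              "a real scalar is its own magnitude type");
static_assert(std::is_same<MagType<std::complex<float>>::type, float>::value,
              "std::complex<float> has magnitude type float");
```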

Definition at line 106 of file Tpetra_RowMatrix_decl.hpp.

| Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::BlockCrsMatrix ( ) |

Default constructor: Makes an empty block matrix.

Definition at line 706 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

| Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::BlockCrsMatrix ( const crs_graph_type & graph, const LO blockSize ) |

Constructor that takes a graph and a block size.

The graph represents the mesh. This constructor computes the point Maps corresponding to the given graph's domain and range Maps. If you already have those point Maps, it is better to call the four-argument constructor.
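The two-argument constructor has to derive point Maps from the graph's mesh domain and range Maps. The sketch below shows one natural expansion rule, which is an assumption here rather than something this page states: each mesh global index g becomes blockSize consecutive point global indices starting at indexBase + (g - indexBase) * blockSize. `makePointGids` is a hypothetical helper; if you pass your own point Maps to the four-argument constructor, they must match whatever rule Tpetra actually uses.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using GO = std::int64_t;

// Expand this process's mesh GIDs into point GIDs, ASSUMING each mesh GID g
// owns the contiguous point GIDs
//   indexBase + (g - indexBase) * blockSize + k,  k = 0, ..., blockSize - 1.
// Illustrative only; check the Tpetra source for the exact rule.
std::vector<GO> makePointGids(const std::vector<GO>& meshGids, GO indexBase,
                              int blockSize) {
  assert(blockSize > 0);
  std::vector<GO> pointGids;
  pointGids.reserve(meshGids.size() * static_cast<std::size_t>(blockSize));
  for (GO g : meshGids) {
    const GO start = indexBase + (g - indexBase) * blockSize;
    for (int k = 0; k < blockSize; ++k) pointGids.push_back(start + k);
  }
  return pointGids;
}
```

For example, mesh GIDs {0, 2} with index base 0 and block size 3 expand to point GIDs {0, 1, 2, 6, 7, 8}.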

| graph | [in] A fill-complete graph. |

| blockSize | [in] Number of degrees of freedom per mesh point. |

Definition at line 721 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

| Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::BlockCrsMatrix ( const crs_graph_type & graph, const map_type & domainPointMap, const map_type & rangePointMap, const LO blockSize ) |

Constructor that takes a graph, domain and range point Maps, and a block size.

The graph represents the mesh. This constructor uses the given domain and range point Maps, rather than computing them. The given point Maps must be the same as the above two-argument constructor would have computed.

Definition at line 779 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

| virtual Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::~BlockCrsMatrix ( ) | inline, virtual |

Destructor (declared virtual for memory safety).

Definition at line 245 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

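| Teuchos::RCP< const map_type > Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getDomainMap ( ) const | virtual |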

Get the (point) domain Map of this matrix.

Implements Tpetra::Operator< Scalar, LO, GO, Node >.

Definition at line 839 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get the (point) range Map of this matrix.

Implements Tpetra::Operator< Scalar, LO, GO, Node >.

Definition at line 848 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get the (mesh) Map for the rows of this block matrix.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 857 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get the (mesh) Map for the columns of this block matrix.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 865 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get the global number of block (mesh) rows.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 873 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get the local number of block (mesh) rows.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 881 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Maximum number of entries in any row of the matrix, on this process.

This method only uses the matrix's graph. Explicitly stored zeros count as "entries."

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 889 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::apply (const mv_type & X, mv_type & Y, Teuchos::ETransp mode = Teuchos::NO_TRANS, Scalar alpha = Teuchos::ScalarTraits<Scalar>::one (), Scalar beta = Teuchos::ScalarTraits<Scalar>::zero ()) const

For this matrix A, compute Y := beta * Y + alpha * Op(A) * X.

Op(A) is A if mode is Teuchos::NO_TRANS, the transpose of A if mode is Teuchos::TRANS, and the conjugate transpose of A if mode is Teuchos::CONJ_TRANS.

If alpha is zero, ignore X's entries on input; if beta is zero, ignore Y's entries on input. This follows the BLAS convention, and only matters if X or Y, respectively, has Inf or NaN entries.

Definition at line 897 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
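The update rule and the BLAS zero-coefficient convention can be illustrated without Tpetra. The sketch below assumes a hypothetical block CRS layout (ToyBlockCrs: block row offsets, block column indices, and row-major b x b blocks stored contiguously) and handles only the NO_TRANS case; it is not Tpetra's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>  // std::numeric_limits, handy for seeding y with NaN in tests
#include <vector>

// Hypothetical block CRS storage (not Tpetra's internal layout): rowPtr and
// colInd index b x b blocks; block k is stored row-major at val[k*b*b ...].
struct ToyBlockCrs {
  int b;                     // block size
  std::vector<int> rowPtr;   // numBlockRows + 1 entries
  std::vector<int> colInd;   // block column index of each stored block
  std::vector<double> val;   // colInd.size() * b * b values
};

// y := beta * y + alpha * A * x (no transpose). BLAS convention: if beta == 0,
// y is overwritten without being read, so Inf/NaN in y cannot leak through;
// if alpha == 0, x is never read.
void toyApply(const ToyBlockCrs& A, const std::vector<double>& x,
              std::vector<double>& y, double alpha, double beta) {
  const int b = A.b;
  const int numBlockRows = static_cast<int>(A.rowPtr.size()) - 1;
  assert(y.size() == static_cast<std::size_t>(numBlockRows) * b);
  for (int i = 0; i < numBlockRows; ++i) {
    for (int r = 0; r < b; ++r) {
      double ax = 0.0;  // (A * x) at point row i*b + r
      if (alpha != 0.0) {
        for (int k = A.rowPtr[i]; k < A.rowPtr[i + 1]; ++k) {
          const int j = A.colInd[k];
          for (int c = 0; c < b; ++c) {
            ax += A.val[(static_cast<std::size_t>(k) * b + r) * b + c] * x[j * b + c];
          }
        }
      }
      double& yi = y[i * b + r];
      yi = (beta == 0.0 ? 0.0 : beta * yi) + alpha * ax;
    }
  }
}
```

For example, with the single 2 x 2 block [[1, 2], [3, 4]], x = (1, 1), alpha = 1, and beta = 0, toyApply overwrites y with (3, 7) even if y initially holds NaN.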

inline, virtual

Whether it is valid to apply the transpose or conjugate transpose of this matrix.

Reimplemented from Tpetra::Operator< Scalar, LO, GO, Node >.

Definition at line 289 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::setAllToScalar (const Scalar & alpha)

Set all matrix entries equal to alpha.

Definition at line 990 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

One-line description of this object.

Reimplemented from Tpetra::DistObject< char, LO, GO, Node >.

Definition at line 3442 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Print a description of this object to the given output stream.

| out | [out] Output stream to which to print. |

| verbLevel | [in] Verbosity level at which to print. Valid values include Teuchos::VERB_DEFAULT, Teuchos::VERB_NONE, Teuchos::VERB_LOW, Teuchos::VERB_MEDIUM, Teuchos::VERB_HIGH, and Teuchos::VERB_EXTREME. |

To pass a std::ostream to this method, first wrap it in a Teuchos::FancyOStream, for example with Teuchos::getFancyOStream (Teuchos::rcpFromRef (yourStream)), where yourStream is your std::ostream object, and then pass the dereferenced wrapper to this method.

Reimplemented from Tpetra::DistObject< char, LO, GO, Node >.

Definition at line 3466 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

inline

The number of degrees of freedom per mesh point.

Definition at line 337 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

virtual

Get the (mesh) graph.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3990 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::applyBlock (const BlockMultiVector< Scalar, LO, GO, Node > & X, BlockMultiVector< Scalar, LO, GO, Node > & Y, Teuchos::ETransp mode = Teuchos::NO_TRANS, const Scalar alpha = Teuchos::ScalarTraits<Scalar>::one (), const Scalar beta = Teuchos::ScalarTraits<Scalar>::zero ())

Version of apply() that takes BlockMultiVector input and output.

This method is deliberately not marked const, because it may do lazy initialization of temporary internal block multivectors.

Definition at line 962 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::gaussSeidelCopy (MultiVector< Scalar, LO, GO, Node > & X, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > & B, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > & D, const Scalar & dampingFactor, const ESweepDirection direction, const int numSweeps, const bool zeroInitialGuess) const

Version of gaussSeidel(), with fewer requirements on X.

Not implemented.

Definition at line 1253 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::reorderedGaussSeidelCopy (::Tpetra::MultiVector< Scalar, LO, GO, Node > & X, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > & B, const ::Tpetra::MultiVector< Scalar, LO, GO, Node > & D, const Teuchos::ArrayView< LO > & rowIndices, const Scalar & dampingFactor, const ESweepDirection direction, const int numSweeps, const bool zeroInitialGuess) const

Version of reorderedGaussSeidel(), with fewer requirements on X.

Not implemented.

Definition at line 1271 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::localGaussSeidel (const BlockMultiVector< Scalar, LO, GO, Node > & Residual, BlockMultiVector< Scalar, LO, GO, Node > & Solution, const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > & D_inv, const Scalar & omega, const ESweepDirection direction) const

Local Gauss-Seidel solve, given a factorized diagonal.

| Residual | [in] The "residual" (right-hand side) block (multi)vector. |

| Solution | [in/out] On input: the initial guess / current approximate solution. On output: the new approximate solution. |

| D_inv | [in] Block diagonal, the explicit inverse of this matrix's block diagonal (possibly modified for algorithmic reasons). |

| omega | [in] (S)SOR relaxation coefficient. |

| direction | [in] Forward, Backward, or Symmetric. |

One may access block i in D_inv as the 2-D subview Kokkos::subview (D_inv, i, Kokkos::ALL (), Kokkos::ALL ()).

The resulting block is b x b, where b = this->getBlockSize().

Definition at line 1142 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
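The update performed by a forward sweep can be sketched as textbook block SOR: for each block row i in order, x_i is incremented by omega * D_inv_i * (r_i - sum_j A_ij x_j), where r is the right-hand side. The standalone C++ sketch below assumes a hypothetical block CRS layout (ToyBlockCrs) and stores D_inv as consecutive row-major b x b blocks, mirroring the (block, row, column) indexing described above. It illustrates the technique only and is not Tpetra's localGaussSeidel; a Backward sweep would visit the block rows in reverse order, and a Symmetric sweep does a forward sweep followed by a backward one.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical block CRS storage: block k is b x b, row-major, at val[k*b*b].
struct ToyBlockCrs {
  int b;
  std::vector<int> rowPtr;
  std::vector<int> colInd;
  std::vector<double> val;
};

// One forward block SOR sweep (block Gauss-Seidel when omega == 1):
//   x_i <- x_i + omega * Dinv_i * (rhs_i - sum_j A_ij * x_j)
// Block rows are visited in increasing order, so rows updated earlier in the
// sweep are used immediately. Dinv_i(r, c) is stored at Dinv[(i*b + r)*b + c].
void toyForwardBlockSor(const ToyBlockCrs& A, const std::vector<double>& Dinv,
                        const std::vector<double>& rhs, std::vector<double>& x,
                        double omega) {
  const int b = A.b;
  const int n = static_cast<int>(A.rowPtr.size()) - 1;
  assert(Dinv.size() == static_cast<std::size_t>(n) * b * b);
  std::vector<double> t(b);
  for (int i = 0; i < n; ++i) {
    // t := rhs_i - sum_j A_ij * x_j, including the diagonal block j == i.
    for (int r = 0; r < b; ++r) t[r] = rhs[i * b + r];
    for (int k = A.rowPtr[i]; k < A.rowPtr[i + 1]; ++k) {
      const int j = A.colInd[k];
      for (int r = 0; r < b; ++r)
        for (int c = 0; c < b; ++c)
          t[r] -= A.val[(static_cast<std::size_t>(k) * b + r) * b + c] * x[j * b + c];
    }
    // x_i += omega * Dinv_i * t
    for (int r = 0; r < b; ++r) {
      double s = 0.0;
      for (int c = 0; c < b; ++c)
        s += Dinv[(static_cast<std::size_t>(i) * b + r) * b + c] * t[c];
      x[i * b + r] += omega * s;
    }
  }
}
```

For the 2 x 2 point matrix [[2, 1], [1, 2]] (b = 1), right-hand side (3, 3), zero initial guess, and omega = 1, one sweep yields x = (1.5, 0.75). The second row already uses the updated x_0, which is what distinguishes Gauss-Seidel from Jacobi.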

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::replaceLocalValues (const LO localRowInd, const LO colInds[], const Scalar vals[], const LO numColInds) const

Replace values at the given (mesh, i.e., block) column indices, in the given (mesh, i.e., block) row.

| localRowInd | [in] Local mesh (i.e., block) index of the row in which to replace. |

| colInds | [in] Local mesh (i.e., block) column ind{ex,ices} at which to replace values. colInds[k] is the local column index whose new values start at vals[getBlockSize() * getBlockSize() * k], and colInds has length at least numColInds. This method will only access the first numColInds entries of colInds. |

| vals | [in] The new values to use at the given column indices. Values for each block are stored contiguously, in row major layout, with no padding between rows or between blocks. Thus, if b = getBlockSize(), then vals[k*b*b] .. vals[(k+1)*b*b-1] are the values to use for block colInds[k]. |

| numColInds | [in] The number of entries of colInds. |

Returns the number of valid entries of colInds. colInds[k] is valid if and only if it is a valid local mesh (i.e., block) column index. This method succeeded if and only if the return value equals the input argument numColInds.

Definition at line 1021 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
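The vals layout and the return-value contract can be illustrated on a single row. In the standalone C++ sketch below, ToyBlockRow and toyReplaceLocalValues are hypothetical. The sketch assumes that a column index counts as valid only if the row actually stores a block in that column, and it uses a linear search where a real implementation may do something faster.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical single block row: stored (local) block column indices and
// their b x b blocks, each row-major and contiguous.
struct ToyBlockRow {
  int b;
  std::vector<int> colInds;
  std::vector<double> vals;  // colInds.size() * b * b values
};

// Index of entry (r, c) of the k-th block in a vals-style array.
inline std::size_t blockEntry(int k, int r, int c, int b) {
  return (static_cast<std::size_t>(k) * b + r) * b + c;
}

// Follows the documented contract: copy block k of vals into the stored block
// whose column is colInds[k], skip column indices not stored in the row, and
// return how many column indices were valid.
int toyReplaceLocalValues(ToyBlockRow& row, const int colInds[],
                          const double vals[], int numColInds) {
  const int b = row.b;
  assert(b > 0);
  int numValid = 0;
  for (int k = 0; k < numColInds; ++k) {
    int pos = -1;  // position of colInds[k] among the stored blocks
    for (std::size_t s = 0; s < row.colInds.size(); ++s) {
      if (row.colInds[s] == colInds[k]) {
        pos = static_cast<int>(s);
        break;
      }
    }
    if (pos < 0) continue;  // invalid index: skipped, not counted
    ++numValid;
    for (int r = 0; r < b; ++r)
      for (int c = 0; c < b; ++c)
        row.vals[blockEntry(pos, r, c, b)] = vals[blockEntry(k, r, c, b)];
  }
  return numValid;
}
```

For a row with block size 2 that stores block columns 0 and 3, calling it with colInds = {3, 5} overwrites the block in column 3 with the first four values and returns 1, signaling that one of the two indices was invalid.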

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::sumIntoLocalValues (const LO localRowInd, const LO colInds[], const Scalar vals[], const LO numColInds) const

Sum into values at the given (mesh, i.e., block) column indices, in the given (mesh, i.e., block) row.

| localRowInd | [in] Local mesh (i.e., block) index of the row in which to sum. |

| colInds | [in] Local mesh (i.e., block) column ind{ex,ices} at which to sum. colInds[k] is the local column index whose new values start at vals[getBlockSize() * getBlockSize() * k], and colInds has length at least numColInds. This method will only access the first numColInds entries of colInds. |

| vals | [in] The new values to sum in at the given column indices. Values for each block are stored contiguously, in row major layout, with no padding between rows or between blocks. Thus, if b = getBlockSize(), then vals[k*b*b] .. vals[(k+1)*b*b-1] are the values to use for block colInds[k]. |

| numColInds | [in] The number of entries of colInds. |

Returns the number of valid entries of colInds. colInds[k] is valid if and only if it is a valid local mesh (i.e., block) column index. This method succeeded if and only if the return value equals the input argument numColInds.

Definition at line 1452 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getLocalRowView (const LO localRowInd, const LO *& colInds, Scalar *& vals, LO & numInds) const

Get a view of the (mesh, i.e., block) row, using local (mesh, i.e., block) indices.

This matrix has a graph, and we assume that the graph is fill complete on input to the matrix's constructor. Thus, the matrix has a column Map, and it stores column indices as local indices. This means you can view the column indices as local indices directly. However, you may not view them as global indices directly, since the column indices are not stored as global indices in the graph.

| localRowInd | [in] Local (mesh, i.e., block) row index. |

| colInds | [out] If localRowInd is valid on the calling process, then on output, this is a pointer to the local (mesh, i.e., block) column indices in the given (mesh, i.e., block) row. If localRowInd is not valid, then this is undefined. (Please check the return value of this method.) |

| vals | [out] If localRowInd is valid on the calling process, then on output, this is a pointer to the row's values. If localRowInd is not valid, then this is undefined. (Please check the return value of this method.) |

| numInds | [out] The number of (mesh, i.e., block) indices in colInds on output. |

Returns 0 if localRowInd is valid, else Teuchos::OrdinalTraits<LO>::invalid().

Definition at line 1525 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Not implemented.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3903 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Not implemented.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 1568 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getLocalRowOffsets (const LO localRowInd, ptrdiff_t offsets[], const LO colInds[], const LO numColInds) const

Get relative offsets corresponding to the given column indices, in the given (local) row.

The point of this method is to precompute the results of searching for the offsets corresponding to the given column indices. You may then reuse these search results in replaceLocalValuesByOffsets or sumIntoLocalValuesByOffsets.

Offsets are block offsets; they are for column indices, not for values.

| localRowInd | [in] Local index of the row. |

| offsets | [out] On output: relative offsets corresponding to the given column indices. Must have at least numColInds entries. |

| colInds | [in] The local column indices for which to compute offsets. Must have at least numColInds entries. This method will only read the first numColInds entries. |

| numColInds | [in] Number of entries in colInds to read. |

Definition at line 1594 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
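The benefit of precomputed offsets is that the column search happens once and is then reused, for example when contributions are summed into the same row entries repeatedly. The C++ sketch below illustrates this on a hypothetical single row (ToyBlockRow). The function names, the use of -1 for "not found", and returning the number of found indices from the offset computation are assumptions of this sketch, not Tpetra's API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical block row: stored block column indices and row-major b x b blocks.
struct ToyBlockRow {
  int b;
  std::vector<int> colInds;
  std::vector<double> vals;
};

// Search once: offsets[k] is the position of colInds[k] among the row's
// stored blocks (a block offset, not a value offset), or -1 if absent.
int toyGetRowOffsets(const ToyBlockRow& row, std::ptrdiff_t offsets[],
                     const int colInds[], int numColInds) {
  int numFound = 0;
  for (int k = 0; k < numColInds; ++k) {
    offsets[k] = -1;
    for (std::size_t s = 0; s < row.colInds.size(); ++s) {
      if (row.colInds[s] == colInds[k]) {
        offsets[k] = static_cast<std::ptrdiff_t>(s);
        ++numFound;
        break;
      }
    }
  }
  return numFound;
}

// Reuse: sum vals into the blocks at the precomputed offsets, no searching.
int toySumIntoByOffsets(ToyBlockRow& row, const std::ptrdiff_t offsets[],
                        const double vals[], int numOffsets) {
  const std::size_t bb = static_cast<std::size_t>(row.b) * row.b;
  int numValid = 0;
  for (int k = 0; k < numOffsets; ++k) {
    if (offsets[k] < 0) continue;  // skip indices that were not found
    ++numValid;
    double* blk = &row.vals[static_cast<std::size_t>(offsets[k]) * bb];
    for (std::size_t e = 0; e < bb; ++e) blk[e] += vals[k * bb + e];
  }
  return numValid;
}
```

Calling toySumIntoByOffsets twice with the same offsets accumulates both contributions without repeating the search.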

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::replaceLocalValuesByOffsets (const LO localRowInd, const ptrdiff_t offsets[], const Scalar vals[], const LO numOffsets) const

Like replaceLocalValues, but avoids computing row offsets.

Definition at line 1627 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

LO Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::sumIntoLocalValuesByOffsets (const LO localRowInd, const ptrdiff_t offsets[], const Scalar vals[], const LO numOffsets) const

Like sumIntoLocalValues, but avoids computing row offsets.

Definition at line 1705 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Return the number of entries in the given row on the calling process.

If the given local row index is invalid, this method (sensibly) returns zero, since the calling process trivially does not own any entries in that row.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 1746 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

inline

Whether this object had an error on the calling process.

Import and Export operations using this object as the target of the Import or Export may incur local errors, if some process encounters an LID in its list which is not a valid mesh row local index on that process. In that case, we don't want to throw an exception, because not all processes may throw an exception; this can result in deadlock or put Tpetra in an incorrect state, due to lack of consistency across processes. Instead, we set a local error flag and ignore the incorrect data. When unpacking, we do the same with invalid column indices. If you want to check whether some process experienced an error, you must do a reduction or all-reduce over this flag. Every time you initiate a new Import or Export with this object as the target, we clear this flag. (Note to developers: we clear it at the beginning of checkSizes().)

Definition at line 597 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

The current stream of error messages.

This is only nonempty on the calling process if localError() returns true. In that case, it stores a stream of human-readable, endline-separated error messages encountered during an Import or Export cycle. Every time you initiate a new Import or Export with this object as the target, we clear this stream. (Note to developers: we clear it at the beginning of checkSizes().)

If you want to print this, you are responsible for ensuring that it is valid for the calling MPI process to print to whatever output stream you use. On some MPI implementations, you may need to send the string to Process 0 for printing.

Definition at line 615 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getLocalDiagOffsets (const Kokkos::View< size_t *, device_type, Kokkos::MemoryUnmanaged > & offsets) const

Get offsets of the diagonal entries in the matrix.

Precondition: offsets.extent(0) == getNodeNumRows()

This method creates an array of offsets of the local diagonal entries in the matrix. This array is suitable for use in the two-argument version of getLocalDiagCopy(). However, its contents are not defined in any other context. For example, you should not rely on offsets(i) being the index of the diagonal entry in the views returned by getLocalRowView(). This may be the case, but it need not be. (For example, we may choose to optimize the lookups down to the optimized storage level, in which case the offsets will be computed with respect to the underlying storage format, rather than with respect to the views.)

If the matrix has a const ("static") graph, and if that graph is fill complete, then the offsets array remains valid through calls to fillComplete() and resumeFill(). Otherwise, those calls may invalidate the offsets, which means that you must call this method again to recompute them.

Definition at line 1093 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getLocalDiagCopy (const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > & diag, const Kokkos::View< const size_t *, device_type, Kokkos::MemoryUnmanaged > & offsets) const

Variant of getLocalDiagCopy() that uses precomputed offsets and puts diagonal blocks in a 3-D Kokkos::View.

| diag | [out] On input: Must be preallocated, with dimensions at least (number of diagonal blocks on the calling process) x getBlockSize() x getBlockSize(). On output: the diagonal blocks. Leftmost index is "which block," then the row index within a block, then the column index within a block. |

This method uses the offsets of the diagonal entries, as precomputed by getLocalDiagOffsets(), to speed up copying the diagonal of the matrix.

Definition at line 1322 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
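Offsets of the diagonal blocks let a diagonal copy skip the per-row column search. The C++ sketch below, on a hypothetical block CRS layout (ToyBlockCrs), computes such offsets once and then copies the diagonal blocks into a flat array with the (which block, row, column) layout described above. Measuring offsets from the start of each row and marking missing diagonal blocks with -1 are choices of this sketch; as noted above, Tpetra's own offsets have no defined meaning outside getLocalDiagCopy().

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical block CRS storage: block k is b x b, row-major, at val[k*b*b].
struct ToyBlockCrs {
  int b;
  std::vector<int> rowPtr;
  std::vector<int> colInd;
  std::vector<double> val;
};

// For block row i, the position of block column i relative to the start of
// row i, or -1 if that diagonal block is not stored.
std::vector<std::ptrdiff_t> toyDiagOffsets(const ToyBlockCrs& A) {
  const int n = static_cast<int>(A.rowPtr.size()) - 1;
  std::vector<std::ptrdiff_t> offsets(n, -1);
  for (int i = 0; i < n; ++i) {
    for (int k = A.rowPtr[i]; k < A.rowPtr[i + 1]; ++k) {
      if (A.colInd[k] == i) {
        offsets[i] = k - A.rowPtr[i];
        break;
      }
    }
  }
  return offsets;
}

// Copy the diagonal blocks using precomputed offsets (no column search).
// diag uses the (which block, row, column) layout: diag[(i*b + r)*b + c].
void toyDiagCopy(const ToyBlockCrs& A, const std::vector<std::ptrdiff_t>& offsets,
                 std::vector<double>& diag) {
  const std::size_t bb = static_cast<std::size_t>(A.b) * A.b;
  assert(diag.size() >= offsets.size() * bb);
  for (std::size_t i = 0; i < offsets.size(); ++i) {
    if (offsets[i] < 0) continue;  // no stored diagonal block: leave as is
    const std::size_t k = static_cast<std::size_t>(A.rowPtr[i] + offsets[i]);
    // Stored blocks are row-major, so a straight copy gives (row, column) order.
    for (std::size_t e = 0; e < bb; ++e) diag[i * bb + e] = A.val[k * bb + e];
  }
}
```

For a single block row (b = 2) that stores block column 1 before the diagonal block, the computed offset is 1, and the copy picks out the second stored block.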

void Tpetra::Experimental::BlockCrsMatrix< Scalar, LO, GO, Node >::getLocalDiagCopy (const Kokkos::View< impl_scalar_type ***, device_type, Kokkos::MemoryUnmanaged > & diag, const Teuchos::ArrayView< const size_t > & offsets) const

Variant of getLocalDiagCopy() that uses precomputed offsets and puts diagonal blocks in a 3-D Kokkos::View.

| diag | [out] On input: Must be preallocated, with dimensions at least (number of diagonal blocks on the calling process) x getBlockSize() x getBlockSize(). On output: the diagonal blocks. Leftmost index is "which block," then the row index within a block, then the column index within a block. |

This method uses the offsets of the diagonal entries, as precomputed by getLocalDiagOffsets(), to speed up copying the diagonal of the matrix.

Definition at line 1372 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

protected

Like sumIntoLocalValues, but for the ABSMAX combine mode.

Definition at line 1409 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

protected

Like sumIntoLocalValuesByOffsets, but for the ABSMAX combine mode.

Definition at line 1666 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

inline

Mark the matrix's values as modified in host space.

Definition at line 943 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Mark the matrix's values as modified in device space.

Definition at line 949 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Mark the matrix's values as modified in the given memory space.

Definition at line 956 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Whether the matrix's values need sync'ing to host space.

Definition at line 967 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Whether the matrix's values need sync'ing to device space.

Definition at line 973 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Whether the matrix's values need sync'ing to the given memory space.

Definition at line 980 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Sync the matrix's values to host space.

Definition at line 991 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Sync the matrix's values to device space.

Definition at line 997 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

inline

Sync the matrix's values to the given memory space.

Definition at line 1004 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.
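The modify/sync methods above follow a two-copy protocol: marking one side as modified makes the other side report that it needs a sync, and syncing copies the data across and clears the flag. The C++ class below is a toy model of that protocol written only for illustration (ToyDualValues and its methods are hypothetical). Tpetra builds on Kokkos for this, and the real semantics, such as what happens when both sides are marked modified, may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model of a host/device modify-sync protocol (hypothetical; not Tpetra
// or Kokkos code). Both "spaces" are ordinary host vectors here.
class ToyDualValues {
 public:
  explicit ToyDualValues(std::size_t n) : host_(n, 0.0), device_(n, 0.0) {}

  std::vector<double>& host() { return host_; }
  std::vector<double>& device() { return device_; }

  // Record which copy was written most recently.
  void modifyHost() { hostModified_ = true; }
  void modifyDevice() { deviceModified_ = true; }

  // A copy needs a sync if the other copy was modified.
  bool needSyncHost() const { return deviceModified_; }
  bool needSyncDevice() const { return hostModified_; }

  // Copy only when needed, then clear the flag.
  void syncHost() {
    if (deviceModified_) {
      host_ = device_;
      deviceModified_ = false;
    }
  }
  void syncDevice() {
    if (hostModified_) {
      device_ = host_;
      hostModified_ = false;
    }
  }

 private:
  std::vector<double> host_;
  std::vector<double> device_;
  bool hostModified_ = false;
  bool deviceModified_ = false;
};
```

For instance, after writing to host() and calling modifyHost(), needSyncDevice() returns true until syncDevice() copies the values over.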

inline

Get the host or device View of the matrix's values (val_).

| MemorySpace | Memory space for which to get the Kokkos::View. |

This is not const, because we reserve the right to do lazy allocation on device / "fast" memory. Host / "slow" memory allocations generally are not lazy; that way, the host fill interface always works in a thread-parallel context without needing to synchronize on the allocation.

CT: Although we reserved that "right," we ignored it and explicitly cast away const. Hence I made the non-templated functions [getValuesHost and getValuesDevice; see above] const.

Definition at line 1045 of file Tpetra_Experimental_BlockCrsMatrix_decl.hpp.

virtual

The communicator over which this matrix is distributed.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3719 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The global number of columns of this matrix.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3739 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The number of columns needed to apply the forward operator on this node.

This is the same as the number of elements listed in the column Map. It is not necessarily the same as the number of domain Map elements owned by the calling process.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3747 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The index base for global indices in this matrix.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3755 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The global number of stored (structurally nonzero) entries.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3763 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The local number of stored (structurally nonzero) entries.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3771 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The current number of entries on the calling process in the specified global row.

Note that if the row Map is overlapping, then the calling process might not necessarily store all the entries in the row. Some other process might have the rest of the entries.

Returns Teuchos::OrdinalTraits<size_t>::invalid() if the specified global row does not belong to this matrix, else the number of entries.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3779 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

The maximum number of entries in any row over all processes in the matrix's communicator.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3807 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Whether this matrix has a well-defined column Map.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3815 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Whether matrix indices are locally indexed.

A RowMatrix may store column indices either as global indices (of type GO), or as local indices (of type LO). In some cases (for example, if the column Map has not been computed), it is not possible to switch from global to local indices without extra work. Furthermore, some operations only work for one or the other case.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3843 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Whether matrix indices are globally indexed.

A RowMatrix may store column indices either as global indices (of type GO), or as local indices (of type LO). In some cases (for example, if the column Map has not been computed), it is not possible to switch from global to local indices without extra work. Furthermore, some operations only work for one or the other case.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3851 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Whether fillComplete() has been called.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3859 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Whether this object implements getLocalRowView() and getGlobalRowView().

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3867 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get a copy of the given global row's entries.

This method only gets the entries in the given row that are stored on the calling process. Note that if the matrix has an overlapping row Map, it is possible that the calling process does not store all the entries in that row.

| GlobalRow | [in] Global index of the row. |

| Indices | [out] Global indices of the columns corresponding to values. |

| Values | [out] Matrix values. |

| NumEntries | [out] Number of stored entries on the calling process; length of Indices and Values. |

This method throws std::runtime_error if either Indices or Values is not large enough to hold the data associated with row GlobalRow. If GlobalRow does not belong to the calling process, then the method sets NumEntries to Teuchos::OrdinalTraits<size_t>::invalid(), and does not modify Indices or Values.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3876 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get a constant, nonpersisting, globally indexed view of the given row of the matrix.

The returned views of the column indices and values are not guaranteed to persist beyond the lifetime of this. Furthermore, some RowMatrix implementations allow changing the values, or the indices and values. Any such changes invalidate the returned views.

This method only gets the entries in the given row that are stored on the calling process. Note that if the matrix has an overlapping row Map, it is possible that the calling process does not store all the entries in that row.

Precondition: isGloballyIndexed () && supportsRowViews ()

Postcondition: Indices.size () == getNumEntriesInGlobalRow (GlobalRow)

| GlobalRow | [in] Global index of the row. |

| Indices | [out] Global indices of the columns corresponding to values. |

| Values | [out] Matrix values. |

If GlobalRow does not belong to the calling process, then Indices is set to null.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3890 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Get a copy of the diagonal entries, distributed by the row Map.

On input, the Vector's Map must be the same as the row Map of the matrix. (That is, this->getRowMap ()->isSameAs (* (diag.getMap ())) == true.)

On return, the entries of diag are filled with the diagonal entries of the matrix stored on this process. Note that if the row Map is overlapping, multiple processes may own the same diagonal element. You may combine these overlapping diagonal elements by doing an Export from the row Map Vector to a range Map Vector.

Definition at line 3915 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Scale the RowMatrix on the left with the given Vector x.

On return, for all entries i,j in the matrix, $A(i,j) = x(i) \cdot A(i,j)$.

Definition at line 3968 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.

virtual

Scale the RowMatrix on the right with the given Vector x.

On return, for all entries i,j in the matrix, $A(i,j) = A(i,j) \cdot x(j)$.

Definition at line 3979 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
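For a block matrix, the point indices i and j in these scaling formulas decompose into a block index and an offset within the block. The C++ sketch below applies both scalings to a hypothetical block CRS layout (ToyBlockCrs) on a single process; entry (r, c) of a block in block row I and block column J is taken to sit at point row I*b + r and point column J*b + c. It illustrates the formulas only and is not Tpetra's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical block CRS storage: block k is b x b, row-major, at val[k*b*b].
struct ToyBlockCrs {
  int b;
  std::vector<int> rowPtr;
  std::vector<int> colInd;
  std::vector<double> val;
};

// Left scaling A(i,j) := x(i) * A(i,j): entry (r, c) of a block in block row I
// lies in point row I*b + r, so it is multiplied by x[I*b + r].
void toyLeftScale(ToyBlockCrs& A, const std::vector<double>& x) {
  const int b = A.b;
  const int n = static_cast<int>(A.rowPtr.size()) - 1;
  assert(x.size() == static_cast<std::size_t>(n) * b);
  for (int I = 0; I < n; ++I)
    for (int k = A.rowPtr[I]; k < A.rowPtr[I + 1]; ++k)
      for (int r = 0; r < b; ++r)
        for (int c = 0; c < b; ++c)
          A.val[(static_cast<std::size_t>(k) * b + r) * b + c] *= x[I * b + r];
}

// Right scaling A(i,j) := A(i,j) * x(j): the same entry lies in point column
// J*b + c with J = colInd[k], so it is multiplied by x[J*b + c].
void toyRightScale(ToyBlockCrs& A, const std::vector<double>& x) {
  const int b = A.b;
  const int n = static_cast<int>(A.rowPtr.size()) - 1;
  for (int I = 0; I < n; ++I)
    for (int k = A.rowPtr[I]; k < A.rowPtr[I + 1]; ++k) {
      const int J = A.colInd[k];
      assert(static_cast<std::size_t>(J) * b + b <= x.size());
      for (int r = 0; r < b; ++r)
        for (int c = 0; c < b; ++c)
          A.val[(static_cast<std::size_t>(k) * b + r) * b + c] *= x[J * b + c];
    }
}
```

Left scaling multiplies whole point rows of every block in a block row; right scaling multiplies whole point columns, so the two operations touch the same stored values along different directions.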

|

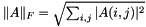

virtual |

The Frobenius norm of the matrix.

This method computes and returns the Frobenius norm of the matrix. The Frobenius norm  for the matrix

for the matrix  is defined as

is defined as  . It has the same value as the Euclidean norm of a vector made by stacking the columns of

. It has the same value as the Euclidean norm of a vector made by stacking the columns of  .

.

Implements Tpetra::RowMatrix< Scalar, LO, GO, Node >.

Definition at line 3998 of file Tpetra_Experimental_BlockCrsMatrix_def.hpp.
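Since unstored entries are zero, the Frobenius norm only needs the stored values. The C++ sketch below (toyFrobeniusNorm is hypothetical) computes it from a flat array of stored values on one process. In a distributed setting, each process would contribute its local sum of squares to a global sum before the square root is taken.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// ||A||_F = sqrt(sum_{i,j} |a_ij|^2). Every stored value of every block is one
// a_ij; entries that are not stored are zero and contribute nothing.
// (Real-valued sketch; for complex Scalar, accumulate std::norm(v) = |v|^2.)
double toyFrobeniusNorm(const std::vector<double>& storedValues) {
  double sumSquares = 0.0;
  for (double v : storedValues) sumSquares += v * v;
  return std::sqrt(sumSquares);
}
```

For example, stored values {3, 4} give a norm of 5.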

virtual, inherited

Get a constant, nonpersisting, locally indexed view of the given row of the matrix, using "raw" pointers instead of Teuchos::ArrayView.

The returned views of the column indices and values are not guaranteed to persist beyond the lifetime of this. Furthermore, some RowMatrix implementations allow changing the values, or the indices and values. Any such changes invalidate the returned views.

This method only gets the entries in the given row that are stored on the calling process. Note that if the matrix has an overlapping row Map, it is possible that the calling process does not store all the entries in that row.

Precondition: isLocallyIndexed () && supportsRowViews ()

Postcondition: numEnt == getNumEntriesInLocalRow (lclRow)

| lclRow | [in] Local index of the row. |

| numEnt | [out] Number of entries in the row that are stored on the calling process. |

| lclColInds | [out] Local indices of the columns corresponding to values. |

| vals | [out] Matrix values. |

pure virtual, inherited

Get a copy of the diagonal entries, distributed by the row Map.

On input, the Vector's Map must be the same as the row Map of the matrix. (That is, this->getRowMap ()->isSameAs (* (diag.getMap ())) == true.)

On return, the entries of diag are filled with the diagonal entries of the matrix stored on this process. Note that if the row Map is overlapping, multiple processes may own the same diagonal element. You may combine these overlapping diagonal elements by doing an Export from the row Map Vector to a range Map Vector.

pure virtual, inherited

Scale the matrix on the left with the given Vector.

On return, for all entries i,j in the matrix, $A(i,j) = x(i) \cdot A(i,j)$.

pure virtual, inherited

Scale the matrix on the right with the given Vector.

On return, for all entries i,j in the matrix, $A(i,j) = A(i,j) \cdot x(j)$.

virtual, inherited

Return a new RowMatrix which is the result of beta*this + alpha*A.

The new RowMatrix is actually a CrsMatrix (which see). Note that RowMatrix is a read-only interface (not counting the left and right scale methods), so it is impossible to implement an in-place add using just that interface.

For brevity, call this matrix B, and the result matrix C. C's row Map will be identical to B's row Map. It is correct, though less efficient, for A and B not to have the same row Maps. We could make C's row Map the union of the two row Maps in that case. However, we don't want row Maps to grow for a repeated sequence of additions with matrices with different row Maps. Furthermore, the fact that the user called this method on B, rather than on A, suggests a preference for using B's distribution. The most reasonable thing to do, then, is to use B's row Map for C.

A and B must have identical or congruent communicators. This method must be called as a collective over B's communicator.

The parameters are optional and may be null. Here are the parameters that this function accepts:

"Call fillComplete" (bool): If true, call fillComplete on the result matrix C. This is true by default.

It is not strictly necessary that a RowMatrix always have a domain and range Map. For example, a CrsMatrix does not have a domain and range Map until after its first fillComplete call. Neither A nor B needs to have a domain and range Map in order to call add(). If at least one of them has a domain and range Map, you need not supply a domain and range Map to this method. If you ask this method to call fillComplete on C (it does by default), it will supply any missing domain or range Maps from either B's or A's (in that order) domain and range Maps. If neither A nor B has a domain and range Map, and if you ask this method to call fillComplete, then you must supply both a domain Map and a range Map to this method.

This method comes with a default implementation, since the RowMatrix interface suffices for implementing it. Subclasses (like CrsMatrix) may override this implementation, for example to improve its performance, given additional knowledge about the subclass. Subclass implementations may need to do a dynamic cast on A in order to know its type.

virtual, inherited

Pack this object's data for an Import or Export.

Subclasses may override this method to speed up or otherwise improve the implementation by exploiting more specific details of the subclass.

Implements Tpetra::Packable< char, LO >.

pure virtual, inherited

Computes the operator-multivector application.

Loosely, performs $Y = \alpha \cdot A^{\mathrm{mode}} \cdot X + \beta \cdot Y$, where mode selects A, its transpose, or its conjugate transpose. However, the details of the operation vary according to the values of alpha and beta.
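To illustrate how alpha and beta typically enter such an update, here is a sketch that assumes the common BLAS-style convention: when beta is zero, Y is overwritten rather than scaled, and when alpha is zero, A and X are not read. It uses a small dense, row-major matrix in place of a real operator and ignores the transpose mode; applySketch is a hypothetical name, not part of Tpetra.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical dense stand-in for an operator: A is nRows x nCols, row-major.
// Computes Y = alpha * A * X + beta * Y for one vector X, ignoring the
// transpose mode, under a BLAS-style convention (an assumption of this sketch):
//   - beta == 0: Y is overwritten, so its previous contents are never read;
//   - alpha == 0: A and X are never read.
void applySketch(const std::vector<double>& A, std::size_t nRows,
                 std::size_t nCols, const std::vector<double>& X,
                 std::vector<double>& Y, double alpha, double beta) {
  assert(A.size() == nRows * nCols && X.size() == nCols && Y.size() == nRows);
  for (std::size_t i = 0; i < nRows; ++i) {
    double ax = 0.0;
    if (alpha != 0.0) {
      for (std::size_t j = 0; j < nCols; ++j) ax += A[i * nCols + j] * X[j];
    }
    Y[i] = (beta == 0.0) ? alpha * ax : alpha * ax + beta * Y[i];
  }
}
```

Overwriting when beta is zero matters in practice: an uninitialized Y may hold NaNs, and 0 * NaN is still NaN.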

inherited

Import data into this object using an Import object ("forward mode").

The input DistObject is always the source of the data redistribution operation, and the *this object is always the target.

If you don't know the difference between forward and reverse mode, then you probably want forward mode. Use this method with your precomputed Import object if you want to do an Import, else use doExport() with a precomputed Export object.

"Restricted Mode" does two things:

1) It skips copyAndPermute.
2) It allows the "target" Map of the transfer to be a subset of the Map of *this, in a "locallyFitted" sense.

Restricted Mode cannot be used if (2) is not true, or if there are permutes. The "source" Maps still need to match.

| source | [in] The "source" object for redistribution. |

| importer | [in] Precomputed data redistribution plan. Its source Map must be the same as the input DistObject's Map, and its target Map must be the same as this->getMap(). |

| CM | [in] How to combine incoming data with the same global index. |
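A single-process model of forward mode may help fix the direction of data flow. The sketch assumes a plan stored as (source LID, target LID) pairs and a two-value stand-in for the combine mode. Plan, SketchCombine, and forwardTransfer are hypothetical names; a real Import additionally records which process owns each entry and moves the data with MPI.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical single-process model of a precomputed plan: each pair reads
// "source entry .first feeds target entry .second" (both local indices).
using Plan = std::vector<std::pair<std::size_t, std::size_t>>;

// Two-value stand-in for a combine mode (not Tpetra's CombineMode enum).
enum class SketchCombine { Replace, Add };

// Forward mode: data moves from the plan's source side to its target side,
// and `target` (playing the role of *this) is the object being written.
void forwardTransfer(const std::vector<double>& source, const Plan& plan,
                     std::vector<double>& target, SketchCombine cm) {
  for (const auto& [src, dst] : plan) {
    assert(src < source.size() && dst < target.size());
    if (cm == SketchCombine::Replace) {
      target[dst] = source[src];
    } else {
      target[dst] += source[src];
    }
  }
}
```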

inherited

Import data into this object using an Export object ("reverse mode").

The input DistObject is always the source of the data redistribution operation, and the *this object is always the target.

If you don't know the difference between forward and reverse mode, then you probably want forward mode. Use the version of doImport() that takes a precomputed Import object in that case.

"Restricted Mode" does two things:

1) It skips copyAndPermute.
2) It allows the "target" Map of the transfer to be a subset of the Map of *this, in a "locallyFitted" sense.

Restricted Mode cannot be used if (2) is not true, or if there are permutes. The "source" Maps still need to match.

| source | [in] The "source" object for redistribution. |

| exporter | [in] Precomputed data redistribution plan. Its target Map must be the same as the input DistObject's Map, and its source Map must be the same as this->getMap(). (Note the difference from forward mode.) |

| CM | [in] How to combine incoming data with the same global index. |
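In reverse mode the same plan is read backwards. The sketch below models a plan built for a transfer from X to Y as (X LID, Y LID) pairs and uses it to move data from Y into X, summing values that land on the same entry, as an additive combine mode would. PlanXtoY and reverseTransferAdd are hypothetical names; the point is only that the plan's source side becomes the object being written.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical plan built for a transfer from X to Y: each pair is
// (local index in X, local index in Y).
using PlanXtoY = std::vector<std::pair<std::size_t, std::size_t>>;

// Reverse mode: reuse the X -> Y plan to move data from Y back into X.
// Y (the plan's target) now supplies the data, and X (the plan's source)
// is written. Values landing on the same X entry are summed.
void reverseTransferAdd(const std::vector<double>& y, const PlanXtoY& plan,
                        std::vector<double>& x) {
  for (const auto& [xLID, yLID] : plan) {
    assert(xLID < x.size() && yLID < y.size());
    x[xLID] += y[yLID];
  }
}
```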

inherited

Export data into this object using an Export object ("forward mode").

The input DistObject is always the source of the data redistribution operation, and the *this object is always the target.

If you don't know the difference between forward and reverse mode, then you probably want forward mode. Use this method with your precomputed Export object if you want to do an Export, else use doImport() with a precomputed Import object.

"Restricted Mode" does two things:

1) It skips copyAndPermute.
2) It allows the "target" Map of the transfer to be a subset of the Map of *this, in a "locallyFitted" sense.

Restricted Mode cannot be used if (2) is not true, or if there are permutes. The "source" Maps still need to match.

| source | [in] The "source" object for redistribution. |

| exporter | [in] Precomputed data redistribution plan. Its source Map must be the same as the input DistObject's Map, and its target Map must be the same as this->getMap(). |

| CM | [in] How to combine incoming data with the same global index. |
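A common reason to Export with an additive combine mode is to assemble overlapping contributions into a nonoverlapping target, for example when several processes share finite-element nodes. The sketch models this with (global index, value) pairs grouped by contributing process. exportAdd and Contribution are hypothetical names, and no real communication happens.

```cpp
#include <cassert>
#include <map>
#include <utility>
#include <vector>

// Hypothetical (global index, value) contribution from one process.
using Contribution = std::pair<long long, double>;

// Model of an Export with an additive combine mode: every process's
// contributions are delivered to the owner of each global index, and values
// that share a global index are summed. The std::map stands in for the
// nonoverlapping target; no real communication happens here.
std::map<long long, double> exportAdd(
    const std::vector<std::vector<Contribution>>& perProcessSource) {
  std::map<long long, double> target;
  for (const auto& contributions : perProcessSource) {
    for (const auto& [gid, value] : contributions) {
      target[gid] += value;  // operator[] value-initializes missing entries to 0.0
    }
  }
  return target;
}
```

Here global index 1 is contributed by both processes, so its target value is the sum of the two contributions.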

inherited

Export data into this object using an Import object ("reverse mode").

The input DistObject is always the source of the data redistribution operation, and the *this object is always the target.

If you don't know the difference between forward and reverse mode, then you probably want forward mode. Use the version of doExport() that takes a precomputed Export object in that case.

"Restricted Mode" does two things:

1) It skips copyAndPermute.
2) It allows the "target" Map of the transfer to be a subset of the Map of *this, in a "locallyFitted" sense.

Restricted Mode cannot be used if (2) is not true, or if there are permutes. The "source" Maps still need to match.

| source | [in] The "source" object for redistribution. |

| importer | [in] Precomputed data redistribution plan. Its target Map must be the same as the input DistObject's Map, and its source Map must be the same as this->getMap(). (Note the difference from forward mode.) |

| CM | [in] How to combine incoming data with the same global index. |

inherited

Whether this is a globally distributed object.

For a definition of "globally distributed" (and its opposite, "locally replicated"), see the documentation of Map's isDistributed() method.

inline, virtual, inherited

The Map describing the parallel distribution of this object.

Note that some Tpetra objects might be distributed using multiple Map objects. For example, CrsMatrix has both a row Map and a column Map. It is up to the subclass to decide which Map to use when invoking the DistObject constructor.

Definition at line 541 of file Tpetra_DistObject_decl.hpp.

inherited

Print this object to the given output stream.

We generally assume that all MPI processes can print to the given stream.

virtual, inherited

Remove processes which contain no entries in this object's Map.

On input, this object is distributed over the Map returned by getMap() (the "original Map," with its communicator, the "original communicator"). The input newMap of this method must be the same as the result of calling getMap()->removeEmptyProcesses(). On processes in the original communicator which contain zero entries ("excluded processes," as opposed to "included processes"), the input newMap must be Teuchos::null (which is what getMap()->removeEmptyProcesses() returns anyway).

On included processes, reassign this object's Map (that would be returned by getMap()) to the input newMap, and do any work that needs to be done to restore correct semantics. On excluded processes, free any data that needs freeing, and do any other work that needs to be done to restore correct semantics.

This method has collective semantics over the original communicator. On exit, the only method of this object which is safe to call on excluded processes is the destructor. This implies that subclasses' destructors must not contain communication operations.
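The renumbering implied by removing empty processes can be sketched from per-process entry counts. This assumes that included processes keep their original relative order in the new communicator, which the text above does not state. newRanksAfterRemovingEmpty is a hypothetical helper, and std::nullopt plays the role of the Teuchos::null Map that excluded processes receive.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical helper: given the number of Map entries each process of the
// original communicator owns, return each process's rank in the new
// communicator. Processes owning zero entries are excluded (std::nullopt,
// mirroring the Teuchos::null newMap they receive); included processes are
// renumbered 0, 1, 2, ... in their original order (an assumption).
std::vector<std::optional<int>> newRanksAfterRemovingEmpty(
    const std::vector<long long>& entriesPerProcess) {
  std::vector<std::optional<int>> newRank(entriesPerProcess.size());
  int next = 0;
  for (std::size_t p = 0; p < entriesPerProcess.size(); ++p) {
    if (entriesPerProcess[p] > 0) {
      newRank[p] = next++;
    }
  }
  return newRank;
}
```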

protected, virtual, inherited

Whether the implementation's instance promises always to have a constant number of packets per LID (local index), and if so, how many packets per LID there are.

If this method returns zero, the instance says that it might possibly have a different number of packets for each LID (local index) to send or receive. If it returns nonzero, the instance promises that the number of packets is the same for all LIDs, and that the return value is this number of packets per LID.

The default implementation of this method returns zero. This does not affect the behavior of doTransfer() in any way. If a nondefault implementation returns nonzero, doTransfer() will use this information to avoid unnecessary allocation and/or resizing of arrays.
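The benefit of a constant packet count shows up when sizing buffers. With a nonzero constant, the total is a single multiplication; a zero return forces a pass over the per-LID counts. totalPackets is a hypothetical helper whose parameter names mirror the documentation. It does not reproduce doTransfer().

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: total number of packets to send or receive.
// constantNumPackets == 0 means "counts may differ per LID", so the
// per-LID array must be consulted; a nonzero value makes it unnecessary.
std::size_t totalPackets(std::size_t constantNumPackets, std::size_t numLIDs,
                         const std::vector<std::size_t>& numPacketsPerLID) {
  if (constantNumPackets != 0) {
    return constantNumPackets * numLIDs;
  }
  assert(numPacketsPerLID.size() == numLIDs);
  return std::accumulate(numPacketsPerLID.begin(), numPacketsPerLID.end(),
                         std::size_t{0});
}
```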

protected, virtual, inherited

Redistribute data across (MPI) processes.

| src | [in] The source object, to redistribute into the target object, which is *this object. |

| transfer | [in] The Export or Import object representing the communication pattern. (Details::Transfer is the common base class of these two objects.) |

| modeString | [in] Human-readable string, for verbose debugging output and error output, explaining what function called this method. Example: "doImport (forward)", "doExport (reverse)". |

| revOp | [in] Whether to do a forward or reverse mode redistribution. |

| CM | [in] The combine mode that describes how to combine values that map to the same global ID on the same process. |

protected, virtual, inherited

Reallocate numExportPacketsPerLID_ and/or numImportPacketsPerLID_, if necessary.

| numExportLIDs | [in] Number of entries in the exportLIDs input array argument of doTransfer(). |

| numImportLIDs | [in] Number of entries in the remoteLIDs input array argument of doTransfer(). |

protected, virtual, inherited

Implementation detail of doTransfer.

LID DualViews come from the Transfer object given to doTransfer. They are always sync'd on both host and device. Users must never attempt to modify or sync them.

protected, pure virtual, inherited

Compare the source and target (this) objects for compatibility.

protected, virtual, inherited

Perform copies and permutations that are local to the calling (MPI) process.

Subclasses must reimplement this function. Its default implementation does nothing. Note that the target object of the Export or Import, namely *this, copies and permutes the source object's data into itself.

Precondition: permuteToLIDs and permuteFromLIDs are sync'd to both host and device; that is, permuteToLIDs.need_sync_host(), permuteToLIDs.need_sync_device(), permuteFromLIDs.need_sync_host(), and permuteFromLIDs.need_sync_device() are all false.

| source | [in] On entry, the source object of the Export or Import operation. |

| numSameIDs | [in] The number of elements that are the same on the source and target objects. These elements live on the same process in both the source and target objects. |

| permuteToLIDs | [in] List of the elements that are permuted. They are listed by their local index (LID) in the destination object. |

| permuteFromLIDs | [in] List of the elements that are permuted. They are listed by their local index (LID) in the source object. |
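On a single process, the copy-and-permute step reduces to two loops. The sketch assumes that the numSameIDs shared entries are the first numSameIDs local indices of both objects, and that values are overwritten rather than combined. copyAndPermuteSketch is a hypothetical free function, not the Tpetra member.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical free-function sketch of copy-and-permute on one process.
// Assumes the numSameIDs shared entries occupy local indices
// 0 .. numSameIDs-1 in both objects, and that values overwrite (no combine).
void copyAndPermuteSketch(const std::vector<double>& source,
                          std::size_t numSameIDs,
                          const std::vector<std::size_t>& permuteToLIDs,
                          const std::vector<std::size_t>& permuteFromLIDs,
                          std::vector<double>& target) {
  assert(permuteToLIDs.size() == permuteFromLIDs.size());
  assert(numSameIDs <= source.size() && numSameIDs <= target.size());
  // Same IDs: identical local index on both sides, so copy straight across.
  for (std::size_t i = 0; i < numSameIDs; ++i) {
    target[i] = source[i];
  }
  // Permuted IDs: source entry permuteFromLIDs[k] goes to target entry
  // permuteToLIDs[k].
  for (std::size_t k = 0; k < permuteToLIDs.size(); ++k) {
    target[permuteToLIDs[k]] = source[permuteFromLIDs[k]];
  }
}
```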

protected, virtual, inherited

Pack data and metadata for communication (sends).

Subclasses must reimplement this function. Its default implementation does nothing. Note that the target object of the Export or Import, namely *this, packs the source object's data.

Precondition: exportLIDs is sync'd to both host and device; that is, exportLIDs.need_sync_host() and exportLIDs.need_sync_device() are both false.

| source | [in] Source object for the redistribution. |

| exportLIDs | [in] List of the entries (as local IDs in the source object) that Tpetra will send to other processes. |

| exports | [out] On exit, the packed data to send. Implementations must reallocate this as needed (prefer reusing the existing allocation if possible), and may modify and/or sync this wherever they like. |

| numPacketsPerLID | [out] On exit, the implementation of this method must do one of two things: either set numPacketsPerLID[i] to the number of packets to be packed for exportLIDs[i] and set constantNumPackets to zero, or set constantNumPackets to a nonzero value. If the latter, the implementation must not modify the entries of numPacketsPerLID. If the former, the implementation may sync numPacketsPerLID wherever it likes, either to host or to device. The allocation belongs to DistObject, not to subclasses; don't be tempted to change this to pass by reference. |

| constantNumPackets | [out] On exit, 0 if the number of packets per LID could differ, else (if nonzero) the number of packets per LID (which must be constant). |

| distor | [in] The Distributor object we are using. Most implementations will not use this. |
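The following sketch packs variable-length rows of doubles into a char buffer and takes the first of the two options described for numPacketsPerLID: it reports a count per LID and sets constantNumPackets to zero. It treats one packet as one byte, an assumption suggested by the Packable< char, LO > interface but not stated as a requirement here. It also takes numPacketsPerLID as already sized by the caller, and packRowsSketch is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical sketch: pack the rows listed in exportLIDs into a char buffer.
// One packet is one byte here, so a row of k doubles needs k * sizeof(double)
// packets. Row lengths differ, so counts are reported per LID and
// constantNumPackets is set to zero.
void packRowsSketch(const std::vector<std::vector<double>>& rows,
                    const std::vector<std::size_t>& exportLIDs,
                    std::vector<char>& exports,
                    std::vector<std::size_t>& numPacketsPerLID,
                    std::size_t& constantNumPackets) {
  // The caller owns and sizes numPacketsPerLID; only its entries are written.
  assert(numPacketsPerLID.size() == exportLIDs.size());
  std::size_t total = 0;
  for (std::size_t i = 0; i < exportLIDs.size(); ++i) {
    numPacketsPerLID[i] = rows[exportLIDs[i]].size() * sizeof(double);
    total += numPacketsPerLID[i];
  }
  // resize() keeps the existing allocation whenever capacity suffices.
  exports.resize(total);
  std::size_t offset = 0;
  for (std::size_t i = 0; i < exportLIDs.size(); ++i) {
    const std::vector<double>& row = rows[exportLIDs[i]];
    if (!row.empty()) {
      std::memcpy(exports.data() + offset, row.data(), numPacketsPerLID[i]);
    }
    offset += numPacketsPerLID[i];
  }
  constantNumPackets = 0;  // per-LID counts are authoritative
}
```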

protected, virtual, inherited

Perform any unpacking and combining after communication.

Subclasses must reimplement this function. Its default implementation does nothing. Note that the target object of the Export or Import, namely *this, unpacks the received data into itself, possibly modifying its entries.

Precondition: importLIDs is sync'd to both host and device; that is, importLIDs.need_sync_host() and importLIDs.need_sync_device() are both false.

| importLIDs | [in] List of the entries (as LIDs in the destination object) we received from other processes. |